In recent months, we have seen growing excitement around the role of artificial intelligence in hardware design.

It is not hard to understand why.

Increasingly advanced machine learning models are now capable of generating architectures, optimising layouts, and accelerating processes that, until recently, required intensive work from engineering teams.

But while many celebrate this growing automation, at Detus we look at this phenomenon from a different perspective.

We do not reject technological progress — quite the opposite. We have already explored, and continue to explore, ways to integrate AI into parts of our own hardware design process.

The problem does not lie in the technology itself, but in the illusion that is built around it.

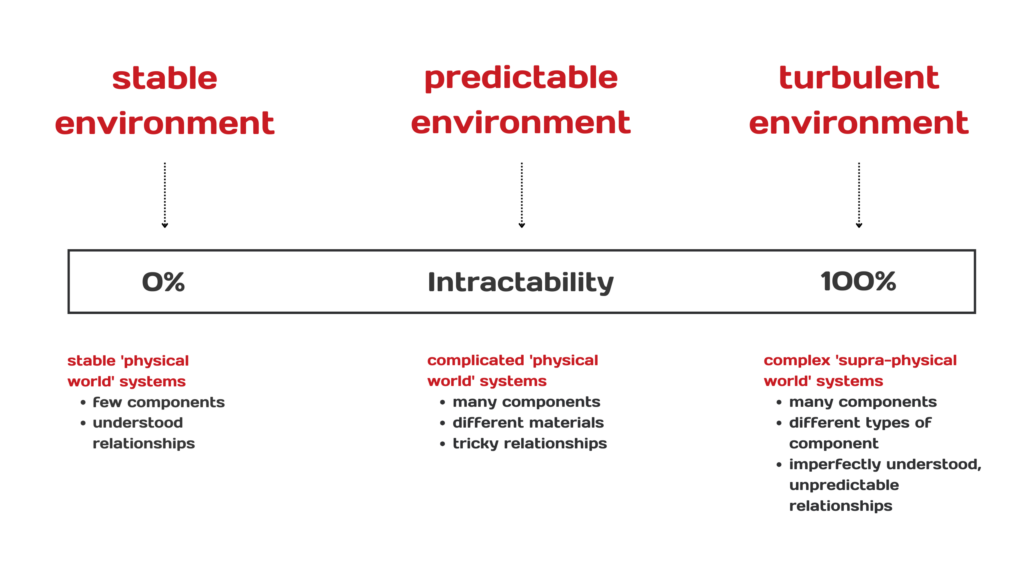

When you automate something you do not fully understand, complexity does not disappear. It simply shifts, becoming more subtle, less predictable, and much harder to control.

This is a well-known pattern in other industries, and one that is now beginning to manifest itself in hardware design as well.

Source: Sketchplanations

This is what technical publications refer to as the Automation Paradox, a phenomenon where the more we automate complex systems, the more we depend on highly qualified engineers to supervise, understand, analyse, and diagnose them when they fail.

And we believe they will fail, because we are entering a new phase in hardware design, one in which AI can generate solutions that even the engineers using it do not fully understand.

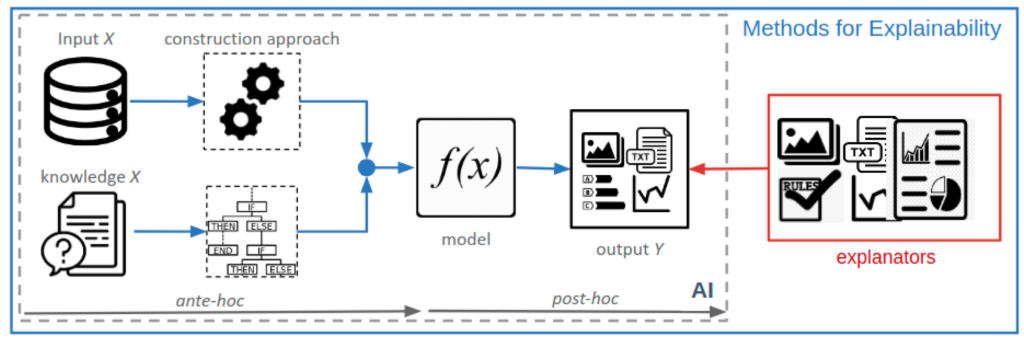

The lack of explainability in generative models applied to physical design is already recognised as one of the greatest challenges in critical systems.

When these solutions fail, as they inevitably will, deep knowledge will be required to diagnose, analyse, and resolve them. Those without this foundation will be left with no means of doing so.

This is why we state clearly that the AI boom will not simplify electronic engineering.

In many respects, it will make it more complex than ever, and will demand an even greater level of technical rigour from teams.

The current state of AI in hardware design

In January 2025, Popular Mechanics published an article about a recent breakthrough in chip design. Researchers at Princeton University used convolutional neural networks to design wireless chips that outperformed existing designs.

The most impressive part was not the technical result, but the fact that many of the engineers involved admitted they did not fully understand the decisions made by the AI during the development process.

This is not an isolated case. In a recent podcast by Siemens Digital Industries Software, specialists warn that the growing use of AI in PCB design is already introducing a new type of opacity into engineering processes, what engineers call a black box. These are systems where only the inputs and outputs are known, and their internal operation is opaque and difficult to audit.

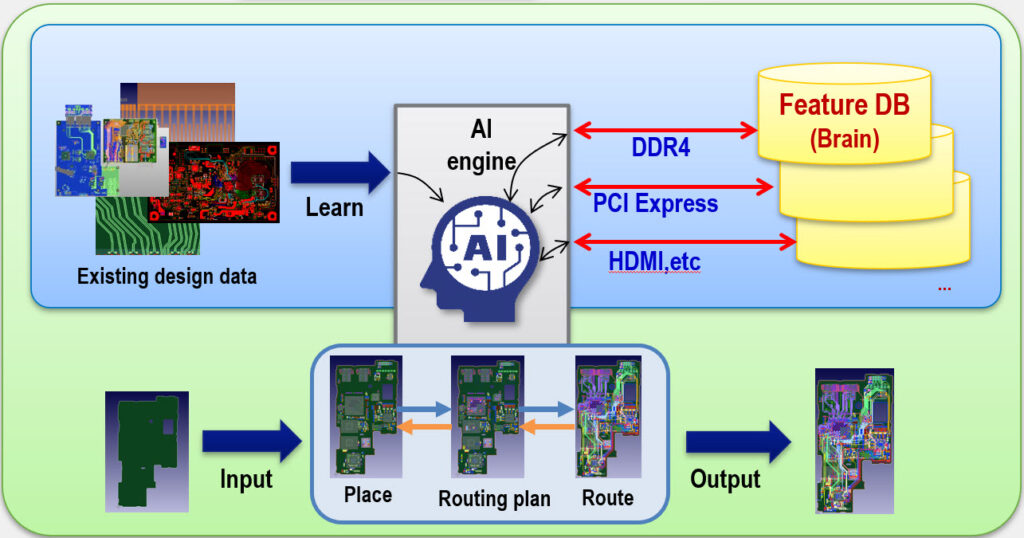

Generative models can already define routing, distribute power planes, suggest stack-up configurations, and optimise signal integrity at a speed that is difficult to match manually. But the more of these decisions are delegated, the harder they become to trace and audit.

This risk is also identified in recent studies on Explainable Hardware (XHW), which show how the lack of transparency in AI-generated systems compromises the ability to analyse, diagnose, and correct them later.

When physical failures occur, such as electromagnetic compatibility issues, unwanted couplings between planes, signal distortions, or unexpected thermal effects, diagnosis becomes significantly more difficult. Teams will need to resolve anomalies in systems whose internal logic they do not master.

These are some of the risks that the evolution of artificial intelligence brings to hardware design.

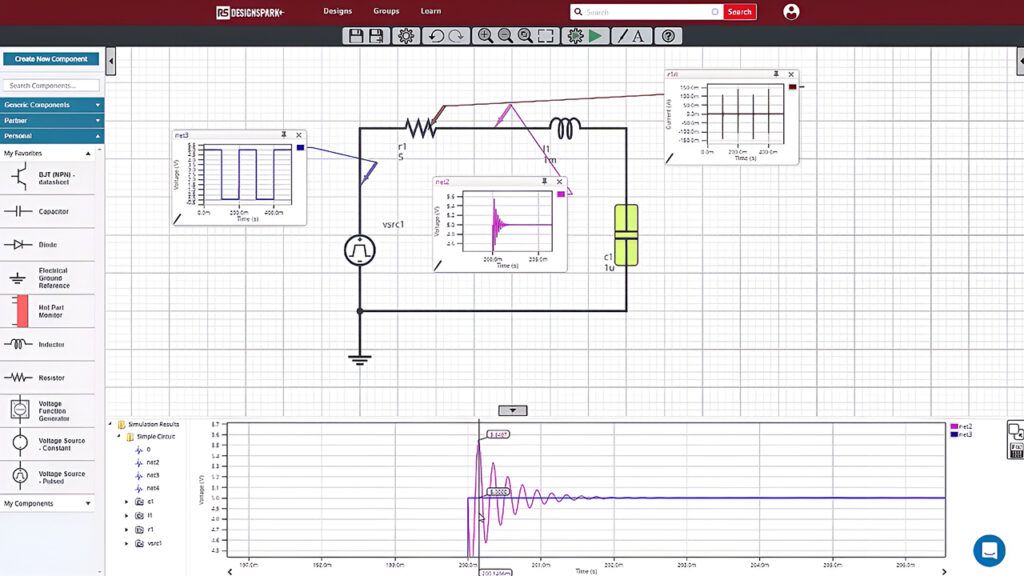

A system that behaves correctly in simulation is not guaranteed to behave the same way in the real world. If no one understands how the optimisation was performed, the time required to solve problems can grow dramatically.

What changes in hardware with Artificial Intelligence

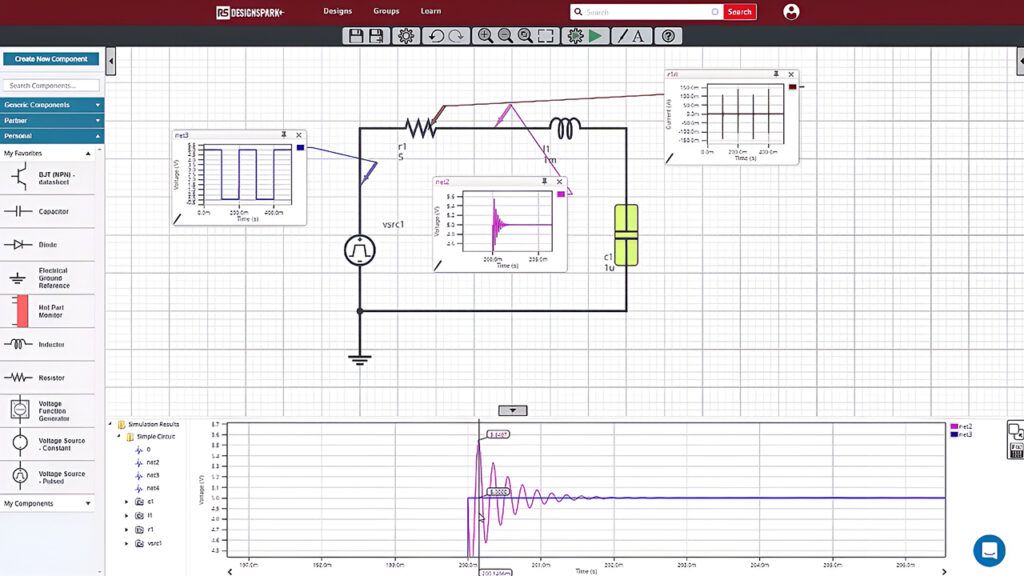

Today, PCB design remains a deeply technical process.

Each stage requires understanding, control, and validation. No automated development tool can, by itself, replace the engineering knowledge that supports this process.

A PCB is not just a functional circuit; it is also a complex physical system, subject to phenomena that do not immediately appear in simulation, such as:

- Electromagnetic interference
- Signal integrity
- Common-mode noise
- Thermal management
- High-frequency coupling effects

All of this requires rigorous analysis and validation.
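Thermal management is a simple illustration of what this analysis looks like in practice. The sketch below is a minimal first-order, steady-state estimate based on the standard relation T_j = T_a + P × θ_JA. The ambient temperature, dissipated power, θ_JA and the 125 °C limit are hypothetical values chosen for illustration, not figures for any particular component.

```python
# First-order steady-state junction temperature estimate:
#   T_j = T_a + P * theta_ja
# All numeric values used below are hypothetical, for illustration only.


def junction_temperature(t_ambient_c: float, power_w: float, theta_ja_c_per_w: float) -> float:
    """Return the estimated junction temperature in degrees Celsius."""
    return t_ambient_c + power_w * theta_ja_c_per_w


def thermal_margin(t_junction_c: float, t_junction_max_c: float) -> float:
    """Return the margin to the rated maximum; a negative value means overheating."""
    return t_junction_max_c - t_junction_c


if __name__ == "__main__":
    # Hypothetical operating point: 50 degC ambient, 1.5 W dissipated, 40 degC/W.
    tj = junction_temperature(t_ambient_c=50.0, power_w=1.5, theta_ja_c_per_w=40.0)
    margin = thermal_margin(tj, t_junction_max_c=125.0)
    print(f"Estimated T_j = {tj:.1f} degC, margin = {margin:.1f} degC")
```

The arithmetic is trivial; the judgement is not. Datasheet θ_JA values are measured on a standardised test board, so the real figure depends on the copper area, stack-up and airflow of the actual layout. Someone still has to check whether that assumption holds for a layout produced by an automated tool.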

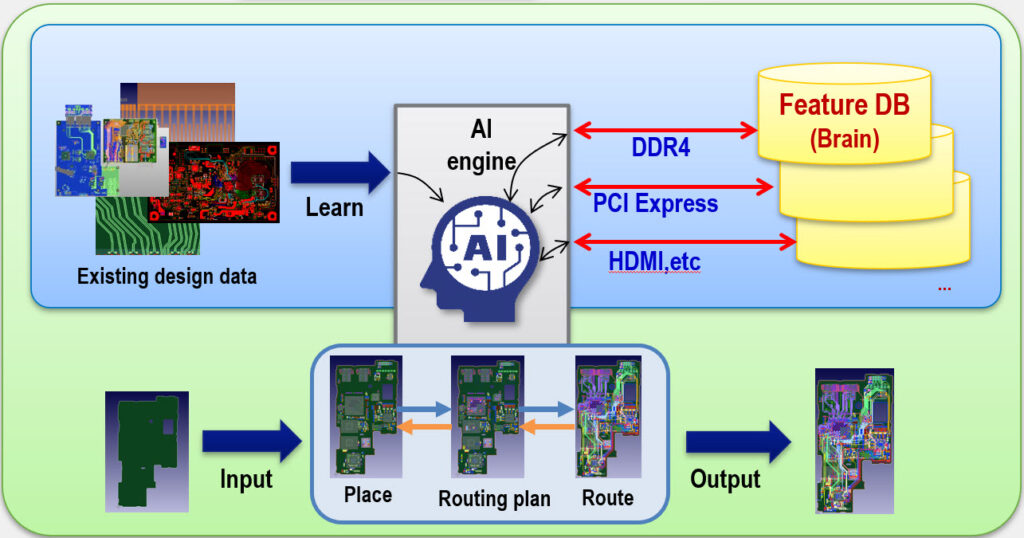

In recent years, we have seen increasing integration of artificial intelligence into PCB design tools.

Some machine learning models today are capable of generating complex routes, optimising layouts, and even predicting, to some extent, EMI problems.

According to an article published in Embedded, AI is increasingly being used to optimise processes that previously depended on human intervention, both in manufacturing and in design.

Tools like those from Siemens EDA already allow the use of artificial intelligence to optimise the distribution of power planes, reduce unwanted couplings, and minimise radiated emissions. This is a real advancement, with great potential.

But here lies the real risk. As these tools become more powerful and accessible, there is a growing temptation to automate critical stages of design without ensuring that teams fully understand the underlying principles.

This is precisely the danger of automating PCB development.

By allowing a design to be created without deep understanding of the principles involved, there is a risk of building systems that, when they do not perform as expected, become almost impossible to diagnose.

Diagnosing and correcting failures remains, and will remain, an unavoidable stage in hardware development. No AI tool will eliminate the need to deeply understand the physical behaviour of the system.

Anyone who believes that the future of PCB design will simply be about generating a functional layout, without mastering the fundamentals, will end up facing much more difficult challenges to solve.

The risk: the new profile of professionals

As automated hardware development tools become more accessible, it is inevitable that a new generation of professionals will emerge who have never fully studied the fundamentals of electronic design.

This is, in fact, one of the most discussed phenomena in the context of the Automation Paradox.

The more we automate critical tasks, the greater the risk that future generations will lose touch with the principles that enable them to understand, validate, and analyse the systems they are building.

We are already seeing signs of this trend. Many new professionals enter the market with strong fluency in AI tools.

They know how to write effective prompts, interpret surface-level results, and automate stages of the process. But they often have serious gaps in fundamental areas such as high-frequency signal analysis, noise control, thermal management, and electromagnetic compatibility.

In an ideal scenario, these tools would complement solid technical knowledge. But in practice, we are starting to see teams that depend almost entirely on automated generation.

When problems arise, as they always do, these teams show great difficulty in identifying the causes.

Issues such as signal reflections, unwanted resonances, unexpected couplings, impedance variations, changes in power integrity, or common-mode interference cannot be detected by a simple superficial analysis of an AI-generated layout. They require a deep understanding of the electromagnetic behaviour of systems.
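Impedance variations show why a layout cannot be judged by appearance alone. A minimal sketch, assuming an idealised lossless line and a single impedance step, computes the voltage reflection coefficient Γ = (Z_L − Z_0) / (Z_L + Z_0) when a trace designed for a hypothetical 50 Ω target is actually built at a different impedance. The impedance values are illustrative only.

```python
import math


def reflection_coefficient(z_load_ohm: float, z0_ohm: float) -> float:
    """Voltage reflection coefficient at a step from impedance z0 to z_load."""
    return (z_load_ohm - z0_ohm) / (z_load_ohm + z0_ohm)


def return_loss_db(gamma: float) -> float:
    """Return loss in dB; a larger value means a smaller reflection."""
    if gamma == 0:
        return math.inf  # perfectly matched: no reflection at all
    return -20.0 * math.log10(abs(gamma))


if __name__ == "__main__":
    z0 = 50.0  # hypothetical target impedance of the trace
    for z_actual in (50.0, 45.0, 60.0, 75.0):  # hypothetical as-built impedances
        gamma = reflection_coefficient(z_actual, z0)
        print(
            f"Z = {z_actual:5.1f} ohm  gamma = {gamma:+.3f}  "
            f"reflected = {abs(gamma) * 100:4.1f} %  RL = {return_loss_db(gamma):.1f} dB"
        )
```

A 75 Ω section on a nominal 50 Ω line reflects a fifth of the incident voltage at that step. Whether that matters depends on edge rates, line length and receiver margins, which is exactly the kind of judgement a superficial review of a generated layout does not provide.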

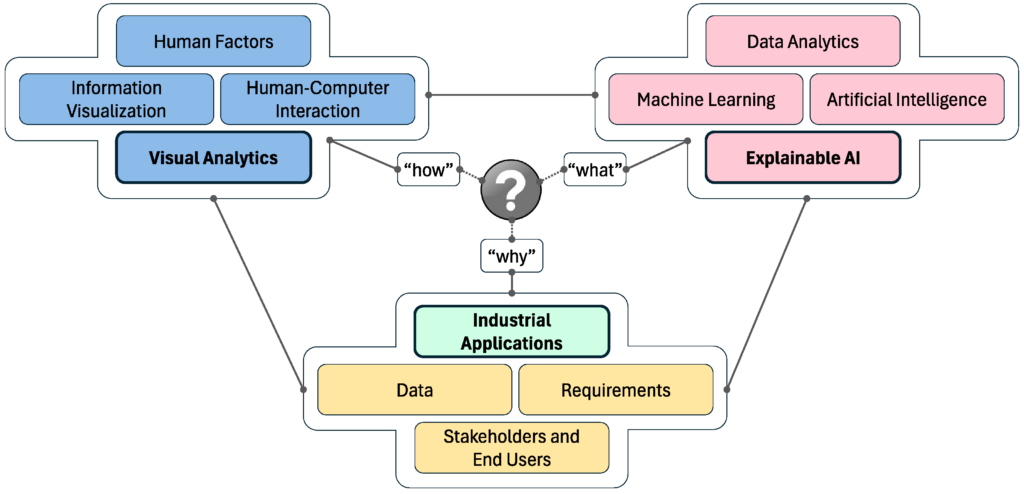

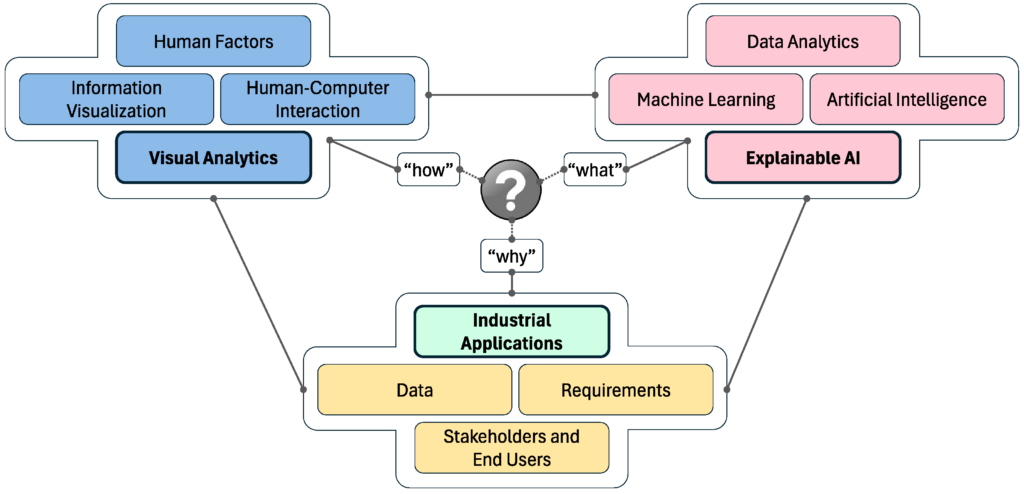

This concern is also reflected in studies on Explainable AI applied to critical systems. As IBM points out, the lack of explainability directly compromises the reliability and validation capability of advanced systems.


This is exactly the risk that the AI boom brings to hardware design. It is not just about technology; it is about competencies.

Teams that lose touch with the fundamentals of engineering will inevitably produce systems that are fragile, inconsistent, and difficult to maintain.

At Detus, we consider this one of the most critical issues for the future of electronic engineering.

This is why we deliberately maintain a rigorous focus on continuous training and on strengthening the fundamental principles that sustain the quality of our projects.

The role of AI in hardware: where it makes sense, where it does not

At Detus, we do not hold a dogmatic view of artificial intelligence.

It is not a matter of being for or against. It is a matter of clearly understanding where these technologies add value, and where they introduce risks that cannot be ignored.

There are areas of PCB design where AI does indeed make a promising contribution.

Electromagnetic compatibility analysis is a clear example. Models trained with real data can complement physics-based simulations, helping to predict signal propagation effects in complex situations, which are not always well captured by classical models.

As a recent article in Embedded points out, AI is already being effectively integrated into layout optimisation processes and advanced manufacturing stages, accelerating tasks that would otherwise require long cycles of manual iteration.

Tools from Siemens EDA now also offer advanced capabilities to reduce unwanted couplings, optimise the distribution of power planes, and minimise radiated emissions.

These are areas where AI acts as a true amplifier of the engineering team’s capabilities.

Another important aspect is the competitive advantage that large companies with a vast history of PCB projects can leverage. By creating well-structured and carefully selected internal datasets, they can train models tailored to their own design standards, preserving consistency and reliability in results.

However, there are limits that should not be crossed.

System architecture, interface definition, control of safety margins, and the planning of signal-integrity and thermal-management strategies continue to require deep human knowledge.

No generative model can reliably make these decisions autonomously and in an explainable manner.

As current frameworks on Explainable AI and Explainable Hardware emphasise, the lack of transparency in AI-generated systems is a critical risk in domains where reliability cannot be compromised.

In short, AI should be used as a tool to accelerate and support the engineer, never as a substitute for sound engineering. This is, and will continue to be, the approach we follow at Detus.

Why complexity in Hardware Design will increase with AI

There is a recurring illusion when people talk about automation: the idea that automating a process automatically makes it simpler. In hardware design, this premise is not only false but dangerous.

When automated development tools are introduced into the design process, complexity does not disappear. It transforms. It becomes harder to visualise, harder to control, harder to explain.

This phenomenon is well described by the concept of Shift in Complexity. Complexity is not eliminated by automation; it merely shifts from one phase of the lifecycle to another. In PCB design, it shifts from the creation phase to the validation, debugging, and maintenance phases.

Solutions generated by artificial intelligence may, on the surface, appear optimised. But this optimisation is not always transparent. The generated architecture may hide cross-dependencies, unwanted effects, or weaknesses that only appear under real operating conditions.

As a recent study published on arXiv on Explainable Hardware points out, the opacity of generative models applied to hardware is one of the main factors contributing to increased unpredictability in critical systems.

In addition, the development cycle itself becomes more opaque.

When a team is working on a system they do not fully understand, each incremental change increases the risk of unexpected regressions. Each validation phase takes longer, not because the tool is less capable, but because unpredictability increases.

Recent studies indicate that, in highly automated environments, the cost of corrections associated with explainability failures can greatly exceed the gains achieved during the development phase.

This is why we state with complete conviction that the Artificial Intelligence boom will not simplify hardware design. It will increase its complexity, requiring even better-prepared engineers with an even stronger command of the fundamentals.

The real risk is not in the technology. It lies in the belief that it can replace human knowledge. Those who follow that path will end up facing systems they cannot master.

How teams should prepare

Faced with this new reality, it is not enough to adopt AI tools enthusiastically.

It is necessary to consciously establish a strategy to ensure that fundamental knowledge is not lost, and that the complexity introduced by new tools remains under control.

First, teams should maintain a modular and understandable approach to design. Each functional block should be well defined, documented, and tested in isolation.

Automating creation can accelerate specific tasks, but it should never replace full control over system architecture.

Second, it is crucial to reinforce training in core engineering.

Mastery of applied electromagnetism, electromagnetic compatibility, high-frequency signal analysis, noise control, and thermal management will continue to be indispensable.

No AI tool eliminates the need to understand the underlying physics.

As the authors of the Explainable Hardware framework point out, ensuring that AI-supported hardware design remains explainable is a critical responsibility for engineering teams. It calls for stricter validation and greater rigour in technical documentation.

Third, teams should develop more robust validation processes.

As complexity increases, systematic verification based on real tests becomes even more important. Simulation tools should be complemented with rigorous physical measurements under representative conditions.
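A minimal sketch of that idea, assuming each quantity of interest has both a simulated value and a bench measurement taken under representative conditions. The `Check` class, the quantities and the tolerances are hypothetical; the point is only that the comparison is systematic and every deviation beyond tolerance is flagged.

```python
from dataclasses import dataclass


@dataclass
class Check:
    """One quantity that was both simulated and measured on real hardware."""
    name: str
    simulated: float
    measured: float
    tolerance: float  # allowed absolute deviation, in the same unit as the values


def find_discrepancies(checks: list[Check]) -> list[str]:
    """Return the names of checks whose measurement deviates beyond tolerance."""
    return [c.name for c in checks if abs(c.measured - c.simulated) > c.tolerance]


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    checks = [
        Check("trace impedance (ohm)", simulated=50.0, measured=52.0, tolerance=5.0),
        Check("regulator temperature (degC)", simulated=78.0, measured=91.0, tolerance=5.0),
        Check("radiated emission margin (dB)", simulated=6.0, measured=1.5, tolerance=3.0),
    ]
    for name in find_discrepancies(checks):
        print(f"Investigate: {name}")
```

A flagged item does not say whether the model or the hardware is wrong; it only marks where someone who understands the underlying physics has to look.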

Finally, organisations with a strong history of successful projects will be able to take advantage of creating proprietary datasets.

Models trained with real company data will allow AI to be integrated in a more controlled way, preserving standards and ensuring greater predictability in results.

As IBM also reinforces in its Explainable AI principles, integrating AI in critical domains requires engineers to remain at the centre of the decision-making process. AI should act as an amplifier, never as a substitute.

At Detus, we believe that the future of hardware design depends precisely on this balance: highly qualified teams using AI to enhance their capabilities, without ever giving up a deep understanding of the systems they develop.

Conclusion

Artificial intelligence is undoubtedly transforming hardware design. Its automatic generation capabilities open new possibilities, accelerate processes, and challenge established working models.

But this evolution also brings risks that cannot be ignored. When you automate something you do not understand, complexity does not disappear. It transforms, becoming harder to detect, harder to analyse and diagnose, harder to control.

As the Automation Paradox shows, the more advanced the tools become, the greater the responsibility of engineers. The lack of explainability in critical systems such as hardware is a risk that no responsible company can accept.

The real challenge for technical teams is not to resist adopting AI. It is to integrate it in a careful, critical, conscious way, with robust processes and with a constant reinforcement of fundamental knowledge. Tools evolve, but physics does not change.

At Detus, we continue to believe that hardware engineering demands a deep command of the principles that govern system behaviour. AI can and should be used to amplify this knowledge, never as a shortcut.

The future of hardware design will inevitably be more complex. The teams that know how to prepare for this complexity will be the ones that lead the next generation of innovation. They will also be the ones who ensure that the products we bring to market continue to deserve the trust of customers.